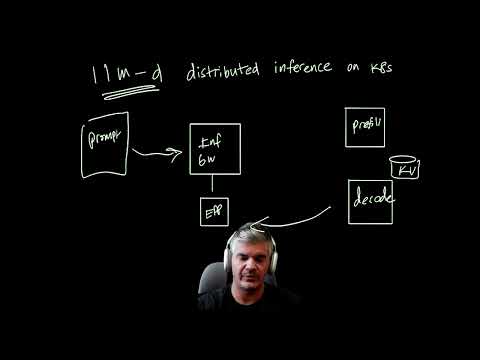

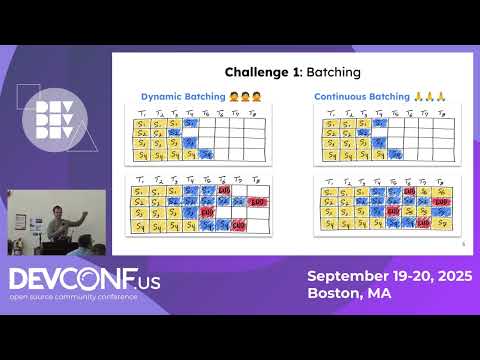

In this session, we explored the latest updates in the vLLM release, including the new Magistral model, FlexAttention support, multi-node serving optimizations, and more. We also took a deep dive into llm-d, a new Kubernetes-native, high-performance distributed LLM inference framework co-designed with the Inference Gateway (IGW). You'll learn what llm-d is and how it works, and see a live demo of it in action.

Session slides:

Join our bi-weekly vLLM office hours:

- 2955 views

- Published 5 months ago by Neural Magic

[vLLM Office Hours #27] Intro to llm-d for Distributed LLM Inference
